65. How Good Are We at Rating Our Own Performance and the Performance of Others?

by Brooke Anderson

This article draws out some interesting observations from a recent 360-degree feedback process conducted at the middle manager level.

You may be surprised to learn that we aren’t always reliable raters of our own performance and the performance of other people.

As leaders and managers, we are called on to rate people on a regular, almost daily basis. We assess and make judgements on a person’s capability, performance, potential, employability and promotability. To do this, we rely on leadership capability frameworks, talent matrixes and performance management guidelines to help us determine whether someone is performing (or not) and what score to give them or category to place them in.

The reality is, however, that our ability to rate another person against abstract qualities, such as their entrepreneurism or how collaborative they are, is affected by:

· our own understanding and experiences of these competencies;

· what we think looks good;

· our unconscious bias;

· the standards we apply as raters; and

· how we perceive and assess our own capabilities.

What our experience reveals

For over 15 years Yellow Edge has been administering 360-degree feedback processes for individuals, large cohorts and organisations. We have been privileged to observe how individuals assess and rate their own capabilities and impact in the workplace, in comparison to how they are rated by their managers, direct reports, and stakeholders (the feedback team).

We would offer some general observations:

1. Individuals tend not to rate themselves accurately.

In a recent 360-degree project, we found that middle-level executive participants rated themselves lower than their feedback team did, by an average of 0.43 points, against all but one of the twenty-six capability and impact statements.

(Scoring: 0 – Not observed/assessed; 1 – Rarely; 2 – Sometimes; 3 – Generally; 4 – Almost Always; 5 – Always)

Where the feedback team reported that the participant ‘almost always’ demonstrated the capability or achieved the impact, the participants themselves were more likely to say that they were only ‘generally’ able to demonstrate the capability or achieve the desired impact.
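For readers who want to see how a gap of this kind can be calculated, the following is a minimal sketch in Python. It is not the method used in the project described here: the function names, the data layout and the ratings are all hypothetical and purely illustrative. It assumes that a score of 0 (Not observed/assessed) should be left out of the averages rather than treated as a low rating.

```python
from statistics import mean

NOT_OBSERVED = 0  # on the scale above, 0 means "not observed/assessed", not a low score


def mean_rating(scores):
    """Average the 1-5 ratings, skipping 0 ("not observed/assessed")."""
    observed = [s for s in scores if s != NOT_OBSERVED]
    return mean(observed) if observed else None


def self_other_gap(self_score, team_scores):
    """Self rating minus the feedback team's mean rating for one statement.

    A negative value means the participant rated themselves lower than
    the feedback team did. Returns None when either side has no usable rating.
    """
    team_mean = mean_rating(team_scores)
    if self_score == NOT_OBSERVED or team_mean is None:
        return None
    return self_score - team_mean


# Hypothetical ratings for one participant on three statements (illustrative only).
ratings = {
    "statement_1": {"self": 3, "team": [4, 4, 5, 0]},
    "statement_2": {"self": 4, "team": [4, 3, 5]},
    "statement_3": {"self": 3, "team": [4, 4, 4, 3]},
}

gaps = {
    name: self_other_gap(r["self"], r["team"])
    for name, r in ratings.items()
}

# Average the gap across statements that have a usable value on both sides.
usable = [g for g in gaps.values() if g is not None]
average_gap = mean(usable)

print(f"Average self-versus-team gap: {average_gap:.2f}")
```

Looking at the gap for each statement, and not only the overall average, shows where the participant and the feedback team differ most, which can be a useful starting point for a debrief.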

This is a common feature and there are, of course, many possible explanations, including a sense of modesty, a desire to stay safe, our predilection for negative self-talk, our predisposition towards feelings of imposter syndrome and raters’ tendency to be generous or lenient. Participants may also lack a basis of comparison to use in rating themselves and so choose to err on the side of caution.

While understandable, these reasons can impede the full effectiveness of 360-degree feedback because they potentially diminish the value of one important data set: the participant’s own self-assessment.

More significantly perhaps, the mismatch in ratings could also mean that participants lack the self-awareness to rate themselves accurately. This is an important area for exploration and a coaching conversation, as greater self-awareness is central to any meaningful 360-degree feedback process.

To facilitate deeper self-awareness, greater exploration and a coaching conversation are necessary to understand the reasons behind any mismatch between the participant’s self-assessment and the perspectives of the feedback group.

2. Proximity, visibility, relevance and opportunity are key variables impacting meaningful feedback.

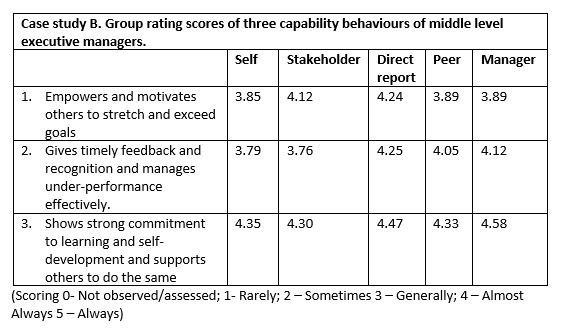

We can see from case study B above that the stakeholder group (i.e., individuals other than the manager or direct reports) were the least likely of all the rater groups to rate participants highest against a capability statement. This may be because stakeholders are the least likely of all the rater groups to see and feel the participating manager’s impact. They may be external to the organisation and not close enough to observe the day-to-day demonstration of capabilities, or they may not have had the opportunity to see these capabilities fully demonstrated.

It could also be that the capabilities being rated are not fully relevant to external stakeholders. What is important to internal feedback groups may not be as important to external stakeholder groups, especially if they have their own particular focus or a particular kind of stakeholder relationship (e.g. supplier).

It seems obvious that proximity, visibility, relevance and opportunity to demonstrate capabilities will contribute to a rater’s ability to gauge the capability of participants.

To enable meaningful feedback, the factors of proximity, visibility and opportunity should be considered in the selection of external raters.

3. Rating individuals leaves out the potential impact of the team and context.

360-degree feedback processes are principally built on capability models which emphasise and articulate individual capabilities, with the assumption that the effective demonstration of these behaviours will lead to successful results.

We know from firsthand field experience that individual leadership excellence does not necessarily mean results are achieved. Performance and the achievement of outcomes require the collective effort of teams and other stakeholders. Individual results are subject to many variables within the individual’s strategic and operating context.

When we rate an individual, we naturally rate that person as someone abstracted from the broader team and operational context within which they might operate and lead. Teams and context may have a positive, negative, indifferent or mixed impact on an individual’s ability to demonstrate capabilities. Indeed, the contemporary, dynamic nature of work is typically characterised by collective effort.

The goal of feedback, including 360-degree feedback, in such a context is to bring out the best in someone in combination with the best in their peers, so that the team can be led well to achieve its ambitions and goals.

Effective 360-degree feedback processes take into account the impact of one’s team and context on the demonstration of capabilities.

Important information:

No organisation or individual is identified in this paper.

Yellow Edge does not hold individual and team 360-degree feedback reports beyond the time necessary to administer and debrief the results.

We expunge all individual and team reports from our drives and systems once the project is completed.

Only Yellow Edge’s executive coaches and staff administering the 360 projects have access to the reports and solely for the purpose of administering the program.

On occasion, Yellow Edge makes a request to its 360-degree feedback technology partners to access de-identified/anonymous 360-degree group data for the purposes of developing reports for organisational leaders to assist with internal learning and development opportunities, and to provide insights reports such as this one.

Published December 2023

Brooke Anderson is Head of Research, Sustainability and Social Impact at Yellow Edge, a leadership development company focused on shaping human potential.

Yellow Edge is a local, privately owned, Canberra-based consulting company focused on helping individuals, teams and organisations to achieve high performance. Yellow Edge is a certified BCorp. BCorp companies make decisions that make a positive impact on their employees, customers, suppliers, community, and the environment. https://www.bcorporation.com.au/